Hi,

I am looking for ways to optimize UUID generation when creating pages programmatically, which currently feels like a bottleneck to me.

My current scenario: on my dev site, I am working with 500+ pages in multiple child/grandchild folders, which include 50,000+ images.

- Single pages are referenced via page://, e.g. in certain routes (this works quickly once the UUID is populated).

- Images (e.g. galleries) are looked up via URI for faster search, but the UUID is also used in some of the logic and is also stored within pages (e.g. in a structure).

While regular loading is very smooth once the UUID is populated, it takes up to 15-20 seconds to generate the initial UUID, apparently because it runs through all existing UUIDs to avoid a collision. So the more pages (and files?) there are, the slower UUID population becomes?

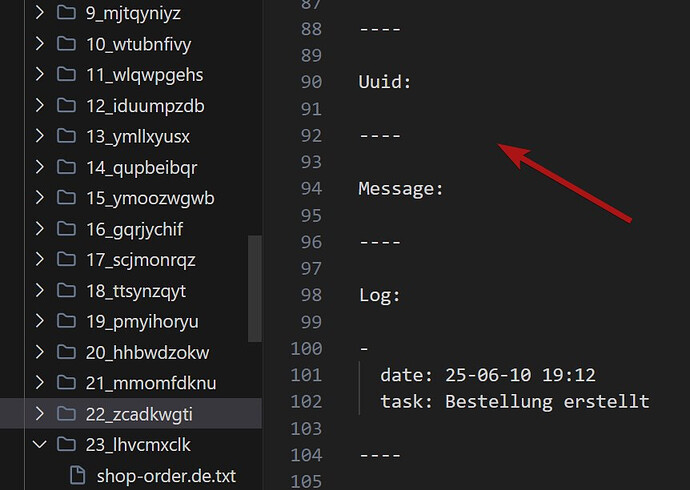

When I observe the file newly created by createChild:

- The content file and its data are generated quickly. The UUID is empty at first and is populated 15-20 seconds later. Until then, $page->changeStatus('listed') is also delayed.

- When pre-setting the UUID, the UUID field already has a value, and this value does not change later. But $page->changeStatus('listed') is still delayed.

- Using Page::create(), I can skip the draft / changeStatus step and generate a page with my pre-set UUID. The file is written very fast and the runtime continues with other tasks, as long as I don't call $page->uuid(), which would hit the same bottleneck:

$p = Page::create([
    'parent'   => $parent,
    'isDraft'  => false,
    'status'   => 'listed',
    'num'      => $data['date'],
    'slug'     => $slug,
    'template' => 'some-template',
    'content'  => $data, // Data also includes $data['uuid'];
]);
// Example: if I later want to redirect to that page,
// loading will be delayed until the UUID has been populated.

I have already tried to speed this up by not calling $page->uuid() immediately, or by pre-filling the UUID to keep it from running through the UUID index. But the result is still unacceptable when the population alone takes 10+ seconds. And even with a pre-set UUID, it still seems to run a cross-check once the page is accessed for the first time.
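A minimal sketch of how such a pre-set value could be generated in plain PHP. The helper name makeUuid() is hypothetical, and the 16-character alphanumeric format is an assumption about what a Kirby-style ID looks like, not something taken from Kirby's source.

```php
<?php
// Hypothetical helper: builds a random alphanumeric ID to pre-fill $data['uuid'].
// Length and alphabet are assumptions for illustration only.
function makeUuid(int $length = 16): string
{
    $alphabet = 'abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789';
    $max      = strlen($alphabet) - 1;
    $id       = '';

    for ($i = 0; $i < $length; $i++) {
        // random_int() draws from a cryptographically secure source
        $id .= $alphabet[random_int(0, $max)];
    }

    return $id;
}
```

With 62 possible characters per position there are 62^16 possible values, so a collision among tens of thousands of IDs is extremely unlikely, which is why accepting the pre-set value without a full cross-check seems reasonable to me.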

I have also tried putting the UUIDs in a cache:

- Redis cache (v5 RC-3)

- SQLite cache

- file cache

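<?php return [
    'pattern' => 'cache-uuids',
    'action'  => function () {
        // kirby() helper is used because $kirby is not defined inside this closure
        foreach (kirby()->site()->index(true) as $page) {
            // populate the UUID cache for every file that is not cached yet
            foreach ($page->files() as $file) {
                if ($file->uuid()->isCached()) continue;
                $file->uuid()->populate();
            }
            // then do the same for the page itself
            if (!$page->uuid()->isCached()) $page->uuid()->populate();
        }
    }
];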

Inside the file cache, the SQLite DB, or the Redis DB, I can see that all 50k+ entries appear to be cached.

But it is still rather slow. Is the cache even being used to check for the next UUID?

Disabling content.uuid is also not really what I want.

To split the work into multiple steps, I have also tried saving the data to a JSON file first and then running a cron job in the background that calls createChild for every available JSON file. The JSON file is created very fast, but the cron job still has to go through the slow UUID population. This idea would work, but the application flow would be different and delayed.
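The two-step idea can be sketched roughly like this in plain PHP. The function names enqueuePageJob() and processPageJobs() and the queue directory are hypothetical; the callback stands in for the actual createChild / Page::create() call.

```php
<?php
// Step 1 (during the request): only write a small JSON job file.
// Hypothetical helper, not part of Kirby.
function enqueuePageJob(string $queueDir, array $data): string
{
    if (!is_dir($queueDir)) {
        mkdir($queueDir, 0775, true);
    }

    // Random file name so parallel requests do not overwrite each other
    $file = $queueDir . '/' . bin2hex(random_bytes(8)) . '.json';
    file_put_contents($file, json_encode($data, JSON_THROW_ON_ERROR), LOCK_EX);

    return $file;
}

// Step 2 (cron job): turn every queued JSON file into a page.
// $createPage is a placeholder for the code that would call createChild.
function processPageJobs(string $queueDir, callable $createPage): int
{
    $done = 0;

    foreach (glob($queueDir . '/*.json') ?: [] as $file) {
        $data = json_decode(file_get_contents($file), true, 512, JSON_THROW_ON_ERROR);

        $createPage($data);

        // Remove the job only after the page has been created
        unlink($file);
        $done++;
    }

    return $done;
}
```

The request itself only pays for writing one small file. The slow part moves into the cron run, which is exactly why the page is not available right away.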

Is there any way to skip running through the whole index and make Kirby just accept the pre-filled UUID as it is?

Are there other ways to reduce the time required for the UUID generation / cross-check?